Learn PyTorch Multi-GPU properly. I'm Matthew, a carrot market machine… | by The Black Knight | Medium

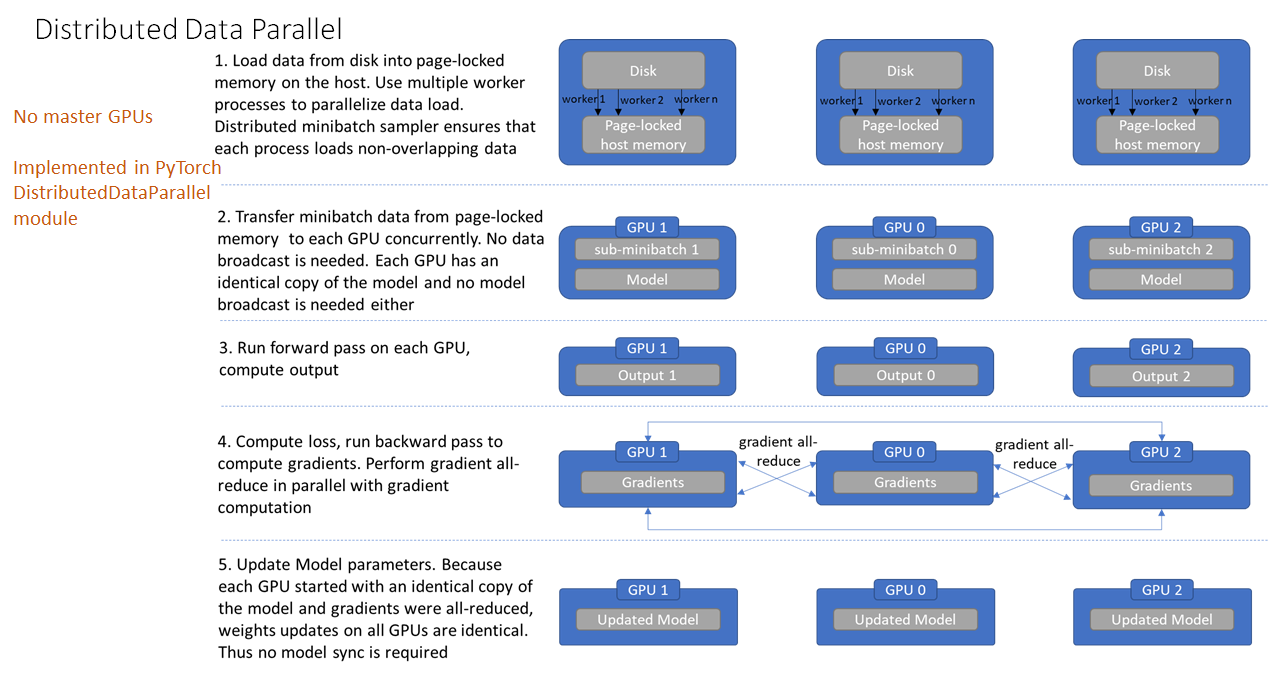

MONAI v0.3 brings GPU acceleration through Auto Mixed Precision (AMP), Distributed Data Parallelism (DDP), and new network architectures | by MONAI Medical Open Network for AI | PyTorch | Medium

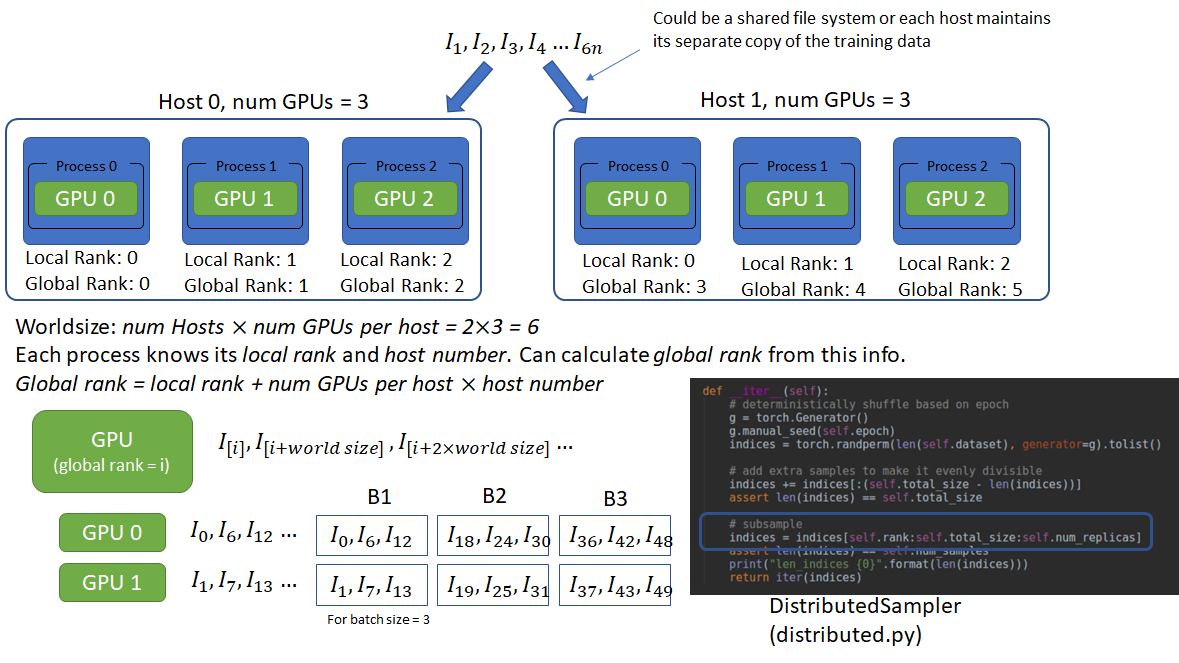

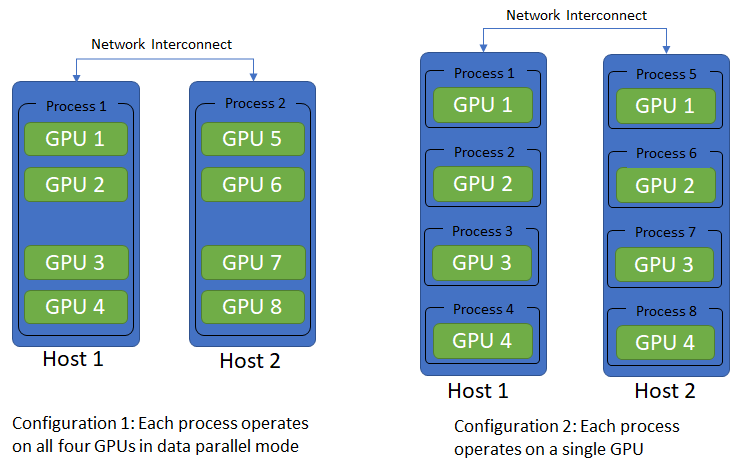

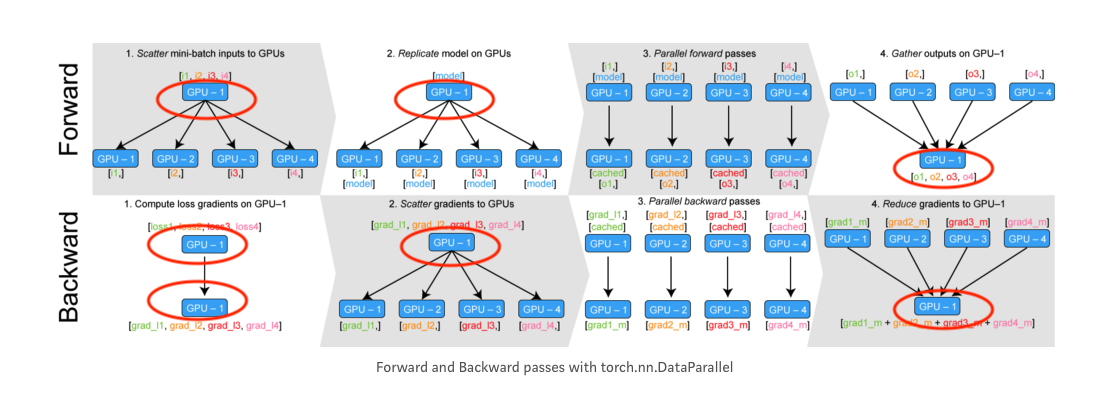

How distributed training works in Pytorch: distributed data-parallel and mixed-precision training | AI Summer